Archive

What is Ventoy, and why you should always have one!

TL;DR;

Before I get to ranting, here is the quick answer:

Ventoy is a Free Software multi-platform tool that lets you boot ISO files from a USB drive.

Key features that make it different from other tools like Etcher or Unetbootin are:

- You don’t need to “flash” the ISO to the drive – just copy like a regular file!

- You can still use your drive normally to carry other files around!

- You can put as many ISO files as will fit on the same USB stick and choose which to boot!

- It supports GNU/Linux, BSD, and even Windows ISO files!

The rant:

The thing I hate most about the GNOME project is that, starting with version 3, they have been systematically destroying their utility apps.

The calculator button layout has become a ridiculous mess, setting Alt + Shift to switch keyboard layout requires a 3rd party tweak tool, and my biggest gripe: the “startup disk creator” has been completely borked!

Back in 2007 I was able to use GNOME 2’s built-in tool to create a persistent live USB stick for a friend in need with just a couple of clicks, while simultaneously preserving the files he already had on that USB drive!

Fast forward to 2021 and all I get is a thin GUI wrapper for the dd command, which not only does not allow creating a persistent installation, but also converts any USB drive into a 2GB DVD, messing up partitioning and even sector size!

Sure, it boots, but it is unusable for anything else, even flashing a different image, unless I clean it up with GParted first.

It is almost like the GNOME project intentionally tries to sabotage itself by becoming more and more useless!

My current predicament:

Even as I type this post my home desktop computer still runs Ubuntu 16.04.

One of the major reasons I am running a 5-year-old version of Ubuntu despite their regular release schedule is the rant above – I did not want to update many of the GNOME components Ubuntu relies on to their newer, more decrepit versions. (And no, I don’t want to switch to KDE, thank you.)

But now the time has come where I can’t put it off any longer:

Arduino IDE 2.0 requires a newer glibc, many of the Python scripts I write use 3.9 features, and some free software projects I really want to build require a higher version of cmake than the one which comes with my current distribution.

Plus, even though this is an LTS version, it will be nearing its end of life soon…

So I tried to run dist-upgrade only to find out that all the messing around with repositories and packages I did over the years messed up my setup so much, it can no longer upgrade.

After trying to solve the issue for a few days with no success, I decided the simplest way forward was to just install a newer version over the old one.

Though my home and my boot partitions are separate, I still took the time to image both with Clonezilla to a backup removable drive.

And a good thing too, as I got careless and managed to destroy both partitions during the upgrade attempt, even though they are on separate physical drives!

Fortunately, the full disk image backup worked like a charm, and I did not lose anything other than a few hours of my life.

But the problem was this:

First, I needed a bootable USB stick with Clonezilla, then one with the latest version of Ubuntu, then Clonezilla again, then one with an older version of Ubuntu to try again (because I suspected the installation failed because my system does not support UEFI).

I don’t have a bunch of USB drives lying around, and reflashing the same drive with my laptop was a pain in the ass.

Today I went to get a few things from my local computer store and was going to get another 32GB Sandisk stick to avoid this hassle in the future.

They didn’t have one on display, but they did have a Kingston 128GB stick, so before sending the nice clerk to fish one out of the storage room I asked how much the 128GB USB stick cost.

Turns out it was less than a serving of ribs at my favorite restaurant.

So I got the bigger stick instead and, remembering that I had heard about this “Ventoy” thing somewhere, decided to give it a try.

The experience:

Though they do not have repositories, not even snap, they do provide a statically linked binary which can be run after unpacking from the tar file without installation.

The interface is super simple, though I have not tried their persistence feature yet, which requires running a separate script.

The tool warned me (twice!) that all the data will be deleted from my USB drive, but when it was done I still had a clean 127GB drive with no garbage files on it, and no messed up partitions.

Then I dropped a few ISO files on it.

I have a tendency to keep all the ISOs I try out, and I tried out a bunch of OSes from Puppy Linux to PC-BSD back in the day, so it was a matter of a few minutes to copy them over a USB 3 port.

And…

It worked just as advertised!

I got a pretty graphical menu letting me select any of the ISOs I put on the drive, and then the selected one just booted.

PC-BSD couldn’t get into a GUI and dropped me to the console, but I suspect this has to do with my new nVidia card. This ISO is very old, I don’t remember when I got it, and it seems PC-BSD has been deprecated for years (I don’t really follow the BSD world).

I will try out MidnightBSD 2.1.0 later on, as I am curious both about how well BSD is supported by Ventoy and what modern desktop BSD looks like.

Ubuntu 21.04 booted without issue.

Conclusion:

Instead of a handful of thumb drives strewn around my desk I now have one drive that serves all my needs:

I can use it to back up my files and whole disks, I can use it to install old and new Ubuntu distros, I can use it to try out new OSes, and I will probably add the Rescue CD image to it later just in case.

I even have a DBAN ISO on there, though I am not planning on nuking any drives any time soon.

And after all that I still have 117.9 GB free on the drive, and only 5 distinct ISO files in the root directory, so I can freely use this drive to carry around other stuff without it mixing in with the OS install files and folders.

And I can add more ISOs any time, in minutes, without any special tool (Ventoy is not needed after installing the bootloader).

There is no configuration, nothing!

Which is why I highly recommend this tool!

It lets you keep a single USB stick as a multitool for all your external booting needs, from installing a new OS to fixing and backing up.

And it’s super easy!

After trying out several different “flashers” in the past, I found this particular tool so useful and convenient that I donated $20 to the developer to show my support.

Heck, it may even be good for the environment by reducing e-waste through reducing the number of USB sticks people like me will need.

Those things do break pretty easily, especially ones with full plastic casing, even from reputable manufacturers (which is why I now only buy ones with metal casings, though that still does not guarantee survivability of the chips inside).

Disclaimer:

I don’t really know anything about the developer behind this project, or the project itself beyond what I experienced using it.

I have not audited the code.

So don’t rely solely on my excitement in this post and check it out for yourself!

The code is available here and here. It is under GPL v3+, which is an excellent choice that shows the developer really cares about the freedom of users down the line.

Update:

I should have read the fine print on MidnightBSD. It is not a LiveCD, it is just an installer.

And it does not like my system at all…

Trying to run it directly from a USB drive (using dd) didn’t even get past the initial bootloader, while via Ventoy it scanned all my hardware, switched to graphical mode momentarily, then prompted me for a “boot device”, which I didn’t know, so I could not continue.

Guess they really need to work on their user friendliness, or be less picky about who they befriend 😛

Maybe I will give them a try on VirtualBox after I am done with my system upgrade…

How “undefined” is undefined behavior?

I was browsing StackOverflow yesterday, and encountered an interesting question.

Unfortunately, that question was closed almost immediately, with comments stating: “this is undefined behavior”, as if there was no explanation for what was happening.

But here is the twist: the author of the question knew already it was “undefined behavior”, but he was getting consistent results, and wanted to know why.

We all know computers are not random. In fact, getting actual random numbers is so hard for a computer that some people resort to using lava lamps to get them.

So what is this “undefined behavior”?

Why are novice programmers, especially in C and C++, always taught that if you do something that leads to undefined behavior, your computer may explode?

Well, I guess because that’s the easier explanation?

Programming languages that are free for anyone to implement, such as C and C++, have very long, very complicated standard documents describing them.

But programmers using these languages seldom read the standard.

Compiler makers do.

So what does “undefined behavior” really mean?

It means the people who wrote the standard don’t care about a particular edge case, or consider it too complicated to handle in a standard way.

So they say to the compiler writers: “programmers shouldn’t do this, but if they do, it is your problem!”.

This means that for every so called “undefined behavior” the compiler will still produce some specific code, and that code will do the same specific thing every time the program is run.

And that means that as long as you don’t recompile your source with a different compiler, you will be getting consistent results.

The only caveat being that many undefined behavior cases involve code that handles uninitialized or improperly initialized memory, and so if memory values change between runs of the program, results may also change.

The SO question

In the SO question this code was given:

#include <iostream>

using namespace std;

int main() {
    char s1[] = {'a', 'b', 'c'};
    char s2[] = "abc";
    cout << s1 << endl;
    cout << s2 << endl;
}

According to the person who posted it, when using some online compiler this code consistently printed the first string with an extra character at the end, and the second string correctly.

However, if the line return 0 was added to the code, it would consistently print both strings correctly.

The undefined behavior happens in line 8 where the code tries to print s1 which is not null terminated.
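For comparison, here is a minimal sketch of a well-defined way to get the text out of an array like s1: hand over the length explicitly instead of relying on a terminator. The helper name `text_of` is made up for this illustration and does not come from the original question.

```cpp
#include <cstddef>
#include <string>

// Build a std::string from a char array that has NO terminating '\0'.
// The array length N is deduced at compile time, so exactly N bytes are
// copied and nothing past the end of the array is ever read.
template <std::size_t N>
std::string text_of(const char (&arr)[N]) {
    return std::string(arr, N);
}
```

With this helper, `cout << text_of(s1) << endl;` prints abc no matter what happens to sit next to s1 in memory. The other obvious fix is to add the terminator by hand: `char s1[] = {'a', 'b', 'c', '\0'};`.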

When I ran this code on my desktop machine with g++ 5.5.0 (yes, I am on an old distro), I got a consistent result that looked like this:

abcabc

abc

Adding and removing return 0 did not change the result.

So it seems that the SO crowd were right: this is some random, unreproducible behavior.

Or is it?

The investigation

I decided I wanted to know what the code was really doing.

Will the result change if I reboot the computer?

Will it change if I run the binary on another computer?

It is tempting to say “yes” because I am sure that like me, at least some of you imagine that without the \0 character in the s1 array, cout will continue trying to print some random memory that may contain anything.

But, that is not at all what happened here!

In fact, I was surprised to learn that my binary will consistently and reliably print the output shown above, and even changing compiler optimization flags didn’t do anything to alter that.

To find out what the code was really doing, I asked the compiler to output assembly instead of machine code.

This revealed some very interesting things:

First, return 0 doesn’t matter!

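Turns out, regardless of me adding the line, gcc would output the code needed to cleanly exit main with return value 0 every time. (The C++ standard actually requires this: reaching the end of main without a return statement is equivalent to return 0.)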

    movl $0, %eax
    movq -8(%rbp), %rdx
    xorq %fs:40, %rdx
    je .L3
    call __stack_chk_fail
.L3:
    leave

Notice the first line: under the x86-64 calling convention used on Linux, a function returns its integer result in the EAX register.

The rest is just cleanup code that is also automatically generated.

Interestingly enough, turning on optimization will replace this line with xorl %eax, %eax, which is considered faster on the x86 architecture.

So, now we know that at least on my version of gcc the return statement makes no changes to the code, but what about the strings?

I expected the string literal "abc" to be stored somewhere in the data segment of the produced assembly file, but I could not find it.

Instead, I found this code for local variable initialization that is pushing values to the stack:

    movb $97, -15(%rbp)
    movb $98, -14(%rbp)
    movb $99, -13(%rbp)
    movl $6513249, -12(%rbp)

The first 3 lines are pretty obvious: these are the ASCII codes for ‘a’, ‘b’ and ‘c’, so this is our s1 array.

But what is this 6513249 number?

Is it a pointer? Can’t be! It’s impossible to hardcode pointers on modern systems, and what would it point to, anyway?

The string “abc” is nowhere to be found in the compiler output… Or is it?

If we look at this value in hex, it all becomes clear:

                       +----+----+----+----+   +---+---+---+----+
6513249 = 0x00636261 = | 61 | 62 | 63 | 00 | = | a | b | c | \0 |
                       +----+----+----+----+   +---+---+---+----+

Yep, that’s our string!

Looks like despite optimizations being turned off, gcc decided to take this 4-byte-long string and turn it into an immediate 32-bit value (keep in mind x86 is little-endian, so the first character ends up in the lowest byte).
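If you want to check this kind of packing yourself rather than doing the hex conversion by hand, a small sketch like the following will do. The function names `pack_le32` and `unpack_le32` are my own for this illustration; this is not what gcc runs internally, just the same arithmetic.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Pack up to four characters into a 32-bit value the way a little-endian
// CPU would read them from memory: first character in the lowest byte.
// Unused positions stay zero, which doubles as the string terminator.
std::uint32_t pack_le32(const std::string& text) {
    std::uint32_t value = 0;
    for (std::size_t i = 0; i < text.size() && i < 4; ++i) {
        value |= static_cast<std::uint32_t>(static_cast<unsigned char>(text[i])) << (8 * i);
    }
    return value;
}

// Reverse direction: read the bytes back out, lowest byte first,
// stopping at the first zero byte.
std::string unpack_le32(std::uint32_t value) {
    std::string text;
    for (int i = 0; i < 4; ++i) {
        char c = static_cast<char>((value >> (8 * i)) & 0xFF);
        if (c == '\0') break;
        text.push_back(c);
    }
    return text;
}
```

Feeding the immediate from a movl line in the assembly listing to `unpack_le32` is a quick way to see which characters it encodes.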

So there you have it: the code the compiler generates writes the byte values of both arrays onto the stack in sequence.

So no matter what, the resulting binary will find the null character at the end of the second string (s2) and will never try to print random values from memory it is not supposed to access.

Of course, it is possible that a different compiler, a different version of gcc, or just compiling for a different architecture will compile this code in a different way, that would result in a different behavior.

There is no guarantee these two local variables will wind up on the stack neatly in a row.

But for now, we seem to have a well defined behavior, though it is not defined by the C++ standard, but by the generated code.

I didn’t write this post to encourage anyone to use code like this anywhere or to claim that we should ignore “undefined behavior”.

I simply want to point out that sometimes it can be educational to look at what your high level code is really doing under the hood, and that with computers, there is often order where people think there is chaos, even if this order isn’t immediately apparent.

I think this question would have made for an interesting and educational discussion for SO, and perhaps next time one comes up, someone will give a more detailed answer before it is closed.

I certainly learned something interesting today.

Hope someone reading this will too!

Cheers.

Linux new Code of Conduct

If you have been following technology news, specifically related to free software or open source, even more specifically related to Linux, you probably already know 2 things:

- Linus Torvalds went on vacation to “improve his people skills”

- Linux Kernel got a sudden and surprising new “Code of Conduct”

Since I am not a kernel developer or an insider of any kind, I have no new information on these issues.

The purpose of this post is to lay out, for myself and anyone interested, the facts of this situation in chronological order.

Also, there is a very good YouTube video that analyzes the new CoC in depth, but unfortunately it is “unlisted” so it will not show up in search results.

I wanted to have another place on the web that links to it.

If you want to understand why people complain about this particular CoC, you must watch this video!

No – it is not because developers like being rude and want to keep doing it!

The facts so far:

- On September 15, 2018 Greg Kroah-Hartman made a commit to the kernel Git repository replacing the existing “Code of Conflict” with a new “Code of Conduct” taken from this site.

  All but one of the kernel maintainers signed off on this commit.

  The one who refused was promptly accused of being a “rape apologist” for his refusal.

  The thing to be concerned about here is the “post meritocracy” manifesto published by the same people who produced the CoC.

  This manifesto goes against the guiding principle behind Linux and most other free software projects: people are judged only by the quality of their work, and nothing else!

- The next day, September 16, 2018, Linus Torvalds posted to the kernel mailing list that he is “going to take time off and get some assistance on how to understand people’s emotions and respond appropriately.”

  Linus did not state that this is a permanent retirement from kernel development.

  But he also did not specify when he will be back.

- On the same day, Coraline Ada Ehmke, the main author of the CoC, tweeted the following inflammatory post:

I can’t wait for the mass exodus from Linux now that it’s been infiltrated by SJWs. Hahahah

For many this confirmed a malicious intent from the creators of the CoC suspected due to the anti-meritocracy manifesto.

- On the next day, September 17, 2018 Coraline confirmed that the CoC was a political document.

  It should be noted that Coraline has never contributed a single line of code to the Linux kernel.

- On September 19, 2018 The New Yorker published a character assassination piece on Linus Torvalds.

  Many on the net speculated that Linus “chose” to take his time off because the New Yorker contacted him before publishing the article, and this was his attempt to mitigate what was published.

- On September 20, 2018 an anonymous user, unconditionedwitness, posted to the kernel mailing list a claim that there is a legal possibility for any developer harmed by the new CoC to demand removal of all their code from the kernel.

- On September 23, 2018 Eric S. Raymond posted to the LKML “On holy wars, and a plea for peace” where he stated “this threat has teeth”.

  He claims to have researched the issue and thinks unconditionedwitness may be correct.

  Unfortunately, Eric is not a lawyer.

- On September 26, 2018, ZDNet published an article attempting to present legal opinion on the possibility of removing code under GPL v2 from the kernel.

  Unfortunately, they did not interview any lawyers regarding the current situation, just quoted old statements.

  Still, it seems that the possibility of code being removed from the kernel is legally dubious at best.

- On October 7, 2018 Phoronix reported that some prominent kernel developers were submitting patches to the CoC in an attempt to mitigate the harm it will cause and make it more usable.

  Unfortunately, these patches do not address all the problems (see the analysis video), and it is not yet known if they will be accepted.

- On October 15, 2018 Phoronix again reported that as of Release Candidate 8 for the upcoming 4.19 kernel no changes to the CoC were accepted.

  So basically, the previously mentioned patches were thrown out.

  There is however another attempt, this time by a developer from Red Hat, to submit more patches trying to fix the CoC.

- On October 20, 2018 (latest news as of the time of this writing), Phoronix reported that though all previous patches were rejected, Greg Kroah-Hartman himself submitted a patch and a new “interpretation” document for the CoC.

  It is interesting to note that the interpretation document is longer than the CoC itself, which brings up the question: how bad does a document need to be for it to require such a long interpretation guideline?

  Can you imagine a comment that is longer than the function it documents?

  Would you accept such code?

  Also note that these changes were discussed privately, and the developer community at large was given only a very short time before the 4.19 release to consider them.

Summary:

It is clear from these events that there is an attack on the Linux kernel project.

Linus Torvalds, the creator of the project, was driven out by using prime time media and political pressure, and at the same time a binding document that is highly controversial at best, and plain destructive at worst, was forced upon the community with no warning and no discussion.

I do not believe anything like this has happened before in the lifetime of the kernel project.

It should be noted however, that as of the time this post was written no other developer besides Linus has been driven off the project or decided to leave of their own free will.

No one is trying to pull code out yet.

Analysis of the CoC:

Some months before the CoC hit the Linux kernel, Paul M. Jones, a PHP developer, gave a presentation detailing all the problems with the CoC.

It is an hour long, and the video and audio quality is not the best, but the content is excellent. If you are wondering what is so bad about the CoC that people like me call it an “attack”, you must watch this video.

You should also read this post and this one.

I was going to add my own analysis here, but this post turned out longer than I expected, so I will write up my own dissection of the CoC in a separate post.

Most of it is covered in the video anyway, please watch it!

How not to protect your app

So, I went to Droidcon this week.

And to be honest, it disappointed me in almost every respect: from content to catering.

I don’t go to many conventions, but compared to August Penguin, where a ticket costs about one tenth as much, Droidcon was surprisingly low quality.

The agenda had not one but two presentations on how to “protect your app from hackers”, and unfortunately, both could be summed up in one word: obfuscate.

Obfuscation is the worst way to secure any software, and Android applications are no different in this regard. If anything, since smartphones today often contain more sensitive and valuable user data than PCs, it is even more important to use real security in mobile apps!

Obfuscation is bad because:

- It is difficult and costly to implement but relatively easy to break.

- It prevents, or at least significantly reduces your ability to debug your app and resolve user reported issues.

- It only needs to be broken once to be broken everywhere, always for any user.

- Fixing it once broken is almost impossible.

Basically, obfuscation is what security experts call “security by obscurity” which is considered very insecure.

Consider this: the entire Internet, including most banking and financial sites runs almost completely on open source software and standard, open, and documented protocols: Apache, NGINX, OpenSSL, Firefox, Chrome.

OpenSSL is particularly interesting, because it provides encryption that is good enough for the most sensitive information on the net, yet it is completely open.

Even the infamous “Dark Web” or more specifically the Tor network, is completely open source.

Truly secure software design does not rely on others not understanding what your code does.

Even malware writers, whose entire bread and butter depends on hiding what their apps are doing, no longer rely on obfuscation but instead have moved on to full blown code encryption and delivering code on demand from a server.

This is because obfuscated code was too easy for security researchers, and even fully automated malware scanners to detect.

If you want to learn more about this, follow security company blogs such as Symantec, Kaspersky and Check Point. Their researchers sometimes publish very interesting malware analysis showing the tricks malware writers use to hide their evil code.

Specifically bad advice

Now I would like to go over some specific advice given in one presentation, that I consider to be particularly bad, some of it to the point where I would call it “anti-advice”:

- Use reflection

- Hide things in “native”

- Hide data with protobufs or similar

- Hide code with ProGuard or similar

I listed these “recommendations” from most to least harmful.

If you are considering using any of these techniques in your app, please read the following explanation before doing so, and reconsider.

1. Using reflection

Reflection is a powerful tool, but it was not designed to hide code.

If you use reflection to call a method in a class, anyone looking at a disassembled version of your app, or even running the simple “strings” utility on it, will still see all the method and class names.

You will gain nothing, but you will lose the protection from bugs and crashes that compiler checks and lint tools normally provide.

You could go as far as scramble (or even fully encrypt) the strings holding the names of the classes and methods you call with reflection and only decipher them at run time.

But this takes a long time to implement, is very error prone, and will make your app slower since it has a lot more work to do for a single, simple function call.

Stop and think carefully: what would a “hacker” analyzing your app gain from knowing what API you are calling?

The answer will always be: nothing, unless your app is very badly written!

Most security apps, like password managers, advertise exactly what encryption they are using so their customers would know how secure they are.

In a truly secure app, knowing what the app does, will not help a bad guy break it in any way.

I challenge anyone to give me an example in the comments of an API call that is worth hiding in a legitimate app.

2. Hide things in “native”

If you are not familiar with JNI and writing C and C++ code for Android, go read up on it, but not in order to protect your app!

Because Java (and Kotlin) still have performance issues when it comes to certain types of tasks (specifically, games and graphics related code), developers at Google created the Native Development Kit (NDK) to let you include native C and C++ code in your app that will run directly on the device processor and not in the Android runtime (Dalvik / ART).

But just as with reflection, the NDK was not designed to hide your code from prying eyes.

And thus, it will not hide anything!

To many Java developers, particularly ones with no experience or knowledge of developing in native (to the hardware) languages like C, a .so binary file will look like complete gibberish even in a hex editor.

But, just because you don’t understand what you are looking at, does not mean someone trying to crack your app will have a hard time understanding it!

A .so file is a standard shared library of the kind used on Linux systems for decades (remember: Android is running on top of the Linux kernel), so there are lots of tools out there to disassemble, decompile and analyze them.

But your attacker probably won’t even need to decompile your .so file. If all they are looking for is some string, like a hard-coded password or API token you put in your app, they can still see it with a simple “strings” utility, same as they would in your Java or Kotlin code. There is no magic here – all strings remain intact when compiling to native.

Also, any external functions or methods your native code calls will appear in the binary file as plain text strings – your OS needs to find them to call them, so they will be there, exposed just like in any other language!
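To show how little effort this takes, here is a rough sketch of what a “strings”-like scan boils down to. It is not the real utility, just the same idea in a few lines; the function name `printable_runs` and the minimum length of 4 are my own choices for the illustration.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Return every run of printable ASCII characters that is at least
// min_len bytes long, similar in spirit to the Unix "strings" tool.
// The input is the raw content of any binary file (for example a .so).
std::vector<std::string> printable_runs(const std::string& bytes,
                                        std::size_t min_len = 4) {
    std::vector<std::string> found;
    std::string current;
    for (unsigned char b : bytes) {
        if (b >= 0x20 && b <= 0x7E) {
            current.push_back(static_cast<char>(b));  // still inside a run
        } else {
            if (current.size() >= min_len) found.push_back(current);
            current.clear();                          // run ended
        }
    }
    if (current.size() >= min_len) found.push_back(current);
    return found;
}
```

Anything you hard-coded, be it a token, a URL or the name of an exported function, comes out of a scan like this as readable text, whichever language it was compiled from.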

But things can get worse: let’s say you have some valuable business logic in your app, and you want to make it harder for hackers to decompile your code and see this logic.

It is true that when you compile Java source, a lot more information about the original code is preserved than when compiling C or C++.

But don’t be tempted by this, because you may leave yourself even more exposed!

If a hacker really wants to run your code for his (or her) nefarious purposes, then wrapping it up in a library that can easily be called from any app is like gift wrapping it for them.

And this is exactly what you will be doing if you put your code in a native .so file: you are putting it in an easy to use library!

Instead of decompiling your code and rewriting it in their app, the hacker can just take your .so file, and call its functions (that must be exposed to work!) from their own app.

They don’t need to know what your code does, they can just feed it parameters and get the result, which is what they really wanted in the first place.

So instead of hampering hacking, you make it easier by using the wrong tool, all the while giving yourself a false sense of security.

3. Hide data with protobufs or similar

By now you should have noticed a pattern: one thing all these pieces of bad advice have in common is recommending the wrong tool for the job.

Protobufs is an excellent open source tool for data serialization.

It is not a security tool!

The actual advice given in the presentation was to replace JSON in server responses with protobufs in order to make the information sent by the server less readable.

But what security do you gain from this? If your server sends a reply like this:

{
    "first_name" : "Jhon",
    "last_name" : "Smith",
    "phone" : "555-12345",
    "email" : "jhon@email.com"
}

converting this structure to a protobuf will look something like this:

xxxxJhonxxxxSmithxxxx555-12345xxxjhon@email.com

Is that really hiding anything?
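If you want to see why, here is a minimal hand-rolled sketch of how the protobuf wire format stores a string field: a tag, a length, and then the text bytes exactly as they are. This is not the real protobuf library, and the field numbers 1 and 2 in the usage note are assumptions for the example, since the presentation did not show a schema.

```cpp
#include <cstdint>
#include <string>

// Append an unsigned integer in protobuf "varint" form:
// 7 bits per byte, least significant group first, high bit set on
// every byte except the last.
void append_varint(std::string& out, std::uint64_t value) {
    while (value >= 0x80) {
        out.push_back(static_cast<char>((value & 0x7F) | 0x80));
        value >>= 7;
    }
    out.push_back(static_cast<char>(value));
}

// Encode one string field: the tag (field number plus wire type 2,
// "length-delimited"), then the byte length, then the raw text bytes.
std::string encode_string_field(std::uint32_t field_number,
                                const std::string& text) {
    std::string out;
    append_varint(out, (static_cast<std::uint64_t>(field_number) << 3) | 2);
    append_varint(out, text.size());
    out += text;  // the text itself is copied unchanged
    return out;
}
```

Calling `encode_string_field(1, "Jhon") + encode_string_field(2, "Smith")` gives two bytes of framing in front of each name and nothing else. The names themselves sit in the message as plain text, ready for anyone with a packet sniffer.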

Protobufs are more compact than JSON, and they can be deserialized faster and more easily than JSON, but they also have some disadvantages: they are not as flexible as JSON.

Every field has to be declared ahead of time in a schema shared by both sides, which makes it hard to create dynamic or self describing objects with protobufs.

If your app needs flexibility in parsing server replies, or if you have other clients, particularly web clients written with JS that access the same server API, JSON may be the better choice for you.

When deciding whether to use JSON or protobufs, consider their advantages and disadvantages for your use case, DO NOT CONSIDER SECURITY!

They are both equally insecure, and you will need encryption (always use SSL!) and proper access validation (passwords, tokens, client certificates) if you want to keep your data safe.

4. Hide code with ProGuard or similar

This advice actually talks about the right tool for a change: ProGuard.

This is a tool Google ships with Android Studio, and it does two things: reduces the size of your code and resources after compilation, and slightly obfuscates your code.

This is not a bad tool, but it comes with a cost, and it won’t really give you protection from hackers.

It will rename your methods like getMySecretPassword() to a() but will that really stop anyone from doing anything bad?

At best, it will slow them down, but keep in mind that it will also cost you:

ProGuard has the side effect of rendering all stack traces useless and making debugging the app extremely difficult.

There is a way to mitigate this: you need to keep the special translation (mapping) file for every single build of your app, because the obfuscated names can change from one build to the next.
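To give an idea of what that translation file is for, here is a rough sketch that reads the class lines of a ProGuard-style mapping (lines of the form `original.Name -> obfuscated.name:`) and builds a lookup table back to the original names. The function name `load_class_mapping` is made up for this post, and member lines are simply skipped. For real crash reports you would use the ReTrace tool that comes with ProGuard rather than something like this.

```cpp
#include <cstddef>
#include <map>
#include <sstream>
#include <string>

// Build a table from obfuscated class name to original class name.
// Only unindented "original -> obfuscated:" lines are used; indented
// member lines, comments and anything malformed are ignored here.
std::map<std::string, std::string> load_class_mapping(const std::string& mapping_text) {
    std::map<std::string, std::string> original_of;
    std::istringstream lines(mapping_text);
    std::string line;
    const std::string arrow = " -> ";
    while (std::getline(lines, line)) {
        if (line.empty() || line[0] == ' ' || line[0] == '\t' || line[0] == '#') continue;
        std::size_t pos = line.find(arrow);
        if (pos == std::string::npos || line.back() != ':') continue;
        std::string original = line.substr(0, pos);
        // Everything after the arrow, minus the trailing ':'.
        std::string obfuscated = line.substr(pos + arrow.size(),
                                             line.size() - pos - arrow.size() - 1);
        original_of[obfuscated] = original;
    }
    return original_of;
}
```

Lose the mapping file that belongs to a given release and a class named a.a in a user’s stack trace stays a.a forever.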

If you need to support users in production and don’t want to be helpless or work extra hard when they report a crash, you might want to give up on ProGuard.

Also keep in mind that you need to carefully tell ProGuard what not to obfuscate, since you must keep any external API calls, components declared in the manifest and some third party library calls intact, or your app will not run.

Remember – ProGuard will:

- Not keep any hardcoded strings safe.

- Not keep your user password safe if you store it as plain text in your app data folder.

- Not keep your communication safe if you do not use SSL.

- Not protect you from MITM attacks if you do not use certificate pinning.

ProGuard might make your final APK file smaller by getting rid of unused code and reducing the length of class and method names, but you should carefully consider the price you will pay for this reduction when dealing with bugs and crashes.

I find it is usually just not worth the hassle.

And there are better tools now for reducing download size, such as App Bundles.

Summary

Messing with your code will never make your app more secure. It will not protect you from hackers.

Even if you do not want, or can not, release your app as open source, you still need to remember that trying to hide its code with obfuscation will cost you more then having your app reverse engineered.

The development, debugging, and user support costs can be as devastating as any hacks!

But, if you treat your code as though it is meant to be open, and make sure that even if a bad person can read and understand everything your app does they still can not get your users’ data or exploit your web server, then, and only then, will your app be truly secure.

And doing that is often easier and cheaper than trying to obfuscate your code or data.

P. S.

One of the presentations mentioned a phenomenon I was not familiar with: “App cloning”.

Apparently, if you publish an ad supported app, some bad people can take your app without your permission, replace your advertisement API keys with their own, and release the app to some unofficial app stores like the ones that are common in China (because Google Play is blocked there by the government).

This way, they will get ad revenue from your app instead of you.

But consider this: would you publish your app to these stores?

If your answer is “no”, then you are not losing anything!

You will never get any money from these users because they will never be able to install your original app, so any effort you put in defending against “cloning” will be a net financial loss to you.

Remember – as a developer, your time is money!

P. S. 2

Someone in the audience asked about Google API keys like the Google Maps API key.

Usually, it is bad practice to put API keys in plain text in the manifest of your app, because anyone can get them from there and use a paid API at your expense.

But this is not the case with Google API keys!

The reason Google tells you to put the key in the manifest is that Google designed these API keys in such a way that they will be useless to anyone but you, so stealing them is pointless.

This is a great example of good security design: instead of relying on app developers to figure out how to distribute an API key to millions of users but keep it safe from hackers, Google tied the key to your signing certificate and your app id (package name).

When you create the API key, you must enter your certificate fingerprint and your package name.

Your private key, the one you use to sign your apps for release, is something most developers already keep very safe. There is never a reason to send it anywhere and it would never be included in the app itself.

It will stay safe on the developer’s computer.

And without this private key, the public API key will not work.

If it is used in an app signed by anyone else, even if that app fakes your app’s id, the API key will still be invalid.
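To show what a certificate fingerprint is, here is a minimal Java sketch that hashes a certificate's bytes and prints the digest in the usual colon-separated form. It is only an illustration under stated assumptions: the class `FingerprintSketch` is made up, SHA-1 is assumed as the digest, and the input bytes are a placeholder rather than a real DER-encoded signing certificate.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

public class FingerprintSketch {
    // Returns the SHA-1 digest of the given bytes as colon-separated upper-case hex.
    static String sha1Fingerprint(byte[] encodedCertificate) {
        byte[] digest;
        try {
            digest = MessageDigest.getInstance("SHA-1").digest(encodedCertificate);
        } catch (NoSuchAlgorithmException e) {
            // SHA-1 is required on every Java platform, so this should not happen.
            throw new IllegalStateException(e);
        }
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < digest.length; i++) {
            if (i > 0) {
                sb.append(':');
            }
            sb.append(String.format("%02X", digest[i]));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Placeholder bytes standing in for a real encoded signing certificate.
        byte[] placeholder = "abc".getBytes(StandardCharsets.US_ASCII);
        System.out.println(sha1Fingerprint(placeholder));
    }
}
```

The fingerprint is derived from the public certificate only, so registering it with the API key gives nothing away. An app signed with a different key will simply carry a different fingerprint.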

This is how you secure apps!

Say hello to the new math!

Yesterday at my company, some of my department stopped work for a day to participate in an “enrichment” course.

We are a large company that can afford such things, and it can be interesting and useful.

We even have the facilities for lectures right at the office (usually used for instructing new hires) so it is very convenient to invite an expert lecturer from time to time.

This time the lecture was about Angular.

As I am not a web developer, I was not invited, but the lecture room is close to my cubicle so during the lunch break I got talking to some of the devs that participated. And of course we got into an argument about programming languages, particularly JavaScript.

I wrote before on this blog that I hate JS with a vengeance. I think it deserves to be called the worst programming language ever developed.

Here are my three main peeves with it:

- The == operator. When you read a programming book for beginners that teaches a specific language and it tells you to avoid using a basic operator because the logic behind it is so convoluted even experienced programmers will have a hard time predicting the result of a comparison, it kills the language for me. I know JS isn’t the only language to use == and ===, but that does not make it any less awful!

- It has the eval built-in function. I already dedicated a post to my thoughts on this.

- The language is now in its 6th major iteration, and everyone uses it as OO, but it still lacks proper syntax support for classes and other OO features.

This makes any serious piece of modern code written in it exceedingly messy and ugly.

But if those 3 items weren’t enough to prefer Brainfuck over JS, yesterday I got a brand new reason: it implements new math!

Turns out, if you divide by 0 in JS YOU GET INFINITY!!!

Yes, I am shouting. I have a huge problem with this. Because it breaks math.

Computers rely on math to work. Computer programs can not break it. This is beyond ridiculous!

I know JS was originally designed to allow people who were not programmers (like graphics designers) to build websites with cool looking features like animated menus. So the entire language was built upon the “on error resume next” paradigm.

And this was ok for its original purpose, but not when the language has grown to be used for building word processors, spreadsheet editors, and a whole ecosystem of complex applications billions of people use every day.

One of the first things every programmer is taught is to be ready to catch and properly handle errors in their code.

I am not a mathematician. In fact, math is part of the reason I never finished my computer science degree. But even I know you can not divide by zero.

Not because your calculator will show an error message. But because it breaks the basic rules of arithmetic.

Think of it this way:

if 5 ÷ 1 = 5 == 1 × 5 = 5
then 5 ÷ 0 = ∞ == 0 × ∞ = 5

Oops, you just broke division, multiplication and addition.

But maybe I am not explaining this right? Maybe I am missing something?

Try this TED-ed video instead, it does a much better job:

If JS wanted to avoid an exception and keep the math, they could have put a NaN there.
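For comparison, here is a minimal Java sketch (the class and method names are made up for illustration) of a language reporting the error instead of returning a number: integer division by zero throws an ArithmeticException that the caller has to handle. Note that Java's floating-point division behaves differently, since it follows the IEEE 754 standard: 5.0 / 0.0 gives Infinity, and only 0.0 / 0.0 gives NaN.

```java
public class DivisionSketch {
    // Integer division by zero is reported as an error the caller must handle.
    static String divideInts(int a, int b) {
        try {
            return String.valueOf(a / b);
        } catch (ArithmeticException e) {
            return "error: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(divideInts(5, 1)); // a normal division
        System.out.println(divideInts(5, 0)); // the exception path
        System.out.println(5.0 / 0.0);        // floating point: infinite, no exception
        System.out.println(0.0 / 0.0);        // floating point: not a number
    }
}
```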

But they didn’t. And they broke the rules of the universe.

So JS sucks, and since it is so prevalent and keeps growing, we are all doomed.

Something to think about… 😛

Sneaking features through the back door

Sometimes programming language developers decide that certain practices are bad, so bad that they try to prevent their use through the language they develop.

For example: In both Java and C# multiple inheritance is not allowed. The language standard prohibits it, so trying to specify more than one base class will result in compiler error.

Another blocking “feature” these languages share is a syntax preventing the creation of derivative classes altogether.

For Java, it is declaring a class to be final which might be a bit confusing for new users, since the same keyword is used to declare constants.

As an example, this will not compile:

public final class YouCanNotExtendMe {
    ...
}

public class TryingAnyway extends YouCanNotExtendMe {
    ...
}

For C# just replace final with sealed.

This can also be applied to specific methods instead of the entire class, to prevent overriding, in both languages.

While application developers may not find many uses for this feature, it shows up even in the Java standard library. Just try extending the built-in String class.

But, language features are tools in a tool box.

Each one can be both useful and misused or abused. It depends solely on the developer using the tools.

And that is why as languages evolve over the years, on some occasions their developers give up fighting the users and add some things they left out at the beginning.

Usually in a sneaky, roundabout way, to avoid admitting they were wrong or that they succumbed to peer pressure.

In this post, I will show two examples of such features, one from Java, and one from C#.

C# Extension methods

In version 3.0 of C# a new feature was added to the language: “extension methods”.

Just as their name suggests, they can be used to extend any class, including a sealed class. And you do not need access to the class implementation to use them. Just write your own class with a static method (or as many methods as you want), that has the first parameter denoted by the keyword this and of the type you want to extend.

Microsoft’s own guide gives an example of adding a method to the built in sealed String type.

Those who know and use C# will probably argue that there are two key differences between extension methods and derived classes:

- Extension methods do not create a new type.

Personally, I think that will only affect compile time checks, which can be replaced with run time checks if not all instances of the ‘base’ class can be extended.

Also, a creative workaround may be possible with attributes.

- Existing methods can not be overridden by extension methods.

This is a major drawback, and I can not think of a workaround for it.

But, you can still overload methods. And who knows what will be added in the future…

So it may not be complete, but a way to break class seals was added to the language after only two major iterations.

Multiple inheritance in Java through interfaces

Java has two separate mechanisms to support polymorphism: inheritance and interfaces.

A Java class can have only one base class it inherits from, but can implement many interfaces, and so can be referenced through these interface types.

public interface IfaceA {
    void methodA();
}

public interface IfaceB {
    void methodB();
}

public class Example implements IfaceA, IfaceB {
    @Override
    public void methodA() {
        ...
    }

    @Override
    public void methodB() {
        ...
    }
}

Example var0 = new Example();
IfaceA var1 = var0;
IfaceB var2 = var0;

But, before Java 8, interfaces could not contain any code, only constants and method declarations, so classes could not inherit functionality from them, as they could by extending a base class.

Thus while interfaces provided the polymorphic part of multiple inheritance, they lacked the functionality reuse part.

In Java 8 all that changed with the addition of default and static methods to interfaces.

Now, an interface could contain code, and any class implementing it would inherit this functionality.
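A minimal sketch of what this looks like in practice. The names `Walker`, `Swimmer` and `Duck` are made up for illustration. The class body is empty, yet it gets working code from two different sources:

```java
interface Walker {
    default String walk() {
        return "walking";
    }
}

interface Swimmer {
    default String swim() {
        return "swimming";
    }
}

// Duck has no method bodies of its own; both behaviors come from the interfaces.
class Duck implements Walker, Swimmer {
}

public class DefaultMethodsSketch {
    static String describe() {
        Duck duck = new Duck();
        Walker asWalker = duck;   // polymorphism through the first interface
        Swimmer asSwimmer = duck; // and through the second one
        return asWalker.walk() + " and " + asSwimmer.swim();
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

If both interfaces declared a default method with the same signature, the compiler would force `Duck` to override it and pick one explicitly, so the classic ambiguity of multiple inheritance is still something the class author has to resolve.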

It appears that Java 9 is about to take this one step further: it will add private methods to interfaces!

Before this, everything in an interface had to be public.

This essentially erases any differences between interfaces and abstract classes, and allows multiple inheritance. But, being a back door feature, it still has some limitations compared to true multiple inheritance that is available in languages like Python and C++:

- You can not inherit any collection of classes together. The class writer must allow joint inheritance by implementing the class as an interface.

- Unlike regular base classes, interfaces can not be instantiated on their own, even if all the methods of an interface have default implementations.

This can be easily worked around by creating a dummy class without any code that implements the interface.

- There are no protected methods.

Maybe Java 10 will add them…
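The dummy class workaround for the instantiation limitation can be sketched like this. The names `Greeter` and `PlainGreeter` are hypothetical, and the anonymous class variant is an alternative shown for comparison, not something the limitation requires:

```java
interface Greeter {
    default String greet() {
        return "hello";
    }
}

// Empty class whose only purpose is to make the interface instantiable.
class PlainGreeter implements Greeter {
}

public class InstantiateSketch {
    static String viaEmptyClass() {
        return new PlainGreeter().greet();
    }

    // An anonymous class with an empty body does the same without a named class.
    static String viaAnonymousClass() {
        return new Greeter() { }.greet();
    }

    public static void main(String[] args) {
        System.out.println(viaEmptyClass());
        System.out.println(viaAnonymousClass());
    }
}
```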

But basically, after 8 major iterations of the language, you can finally have full blown multiple inheritance in Java.

Conclusion

These features have their official excuses:

Extension methods are supposed to be “syntactic sugar” for “helper” and utility classes.

Default method implementation is supposed to allow extending interfaces without breaking legacy code.

But whatever the original intentions and reasoning were, the fact remains: you can have C# code that calls instance methods on objects that are not part of the original object, and you can now have Java classes that inherit “is a” type and working code from multiple sources.

And I don’t think this is a bad thing.

As long as programmers use these tools correctly, it will make code better.

Fighting your users is always a bad idea, more so if your users are developers themselves.

Do you know of any other features like this that showed up in other languages?

Let me know in the comments or by email!

Putting it out there…

When I first discovered Linux and the world of Free Software, I was already programming in the Microsoft ecosystem for several years, both as a hobby, and for a living.

I thought switching to writing Linux programs was just a matter of learning a new API.

I was wrong! Very wrong!

I couldn’t find my way through the myriad of tools, build systems, IDEs and editors, frameworks and libraries.

I needed to change my thinking, and get things in order. Luckily, I found an excellent book that helped me do just that: “Beginning Linux Programming” from Wrox.

Chapter by chapter this book guided me from using the terminal, all the way up to building desktop GUI programs like I was used to on Windows.

But you can’t learn programming just by reading a book. You have to use what you learn immediately and build something, or you will not retain any knowledge!

And that is exactly what I did: as soon as I got far enough to begin compiling C programs, I started building “Terminal Bomber”.

In hindsight, the name sounds like malware. But it is not. It’s just a game. It is a clone of “Mine sweeper” for the terminal, using the ncurses library.

I got as far as making it playable, adding high scores and help screens. I even managed to use Autotools for the build system.

And then I lost interest and let it rot. With the TODO file still full of items.

I never even got to packaging it for distribution, even though I planned from the start to release it under GPL v3.

But now, in preparation for a series of posts about quickly and easily setting up an HTTP server for IPC, I finally opened a GitHub account.

And to fill it with something, I decided to put up a repository for this forgotten project.

So here we go: https://github.com/lvmtime/term_bomber

Terminal Bomber finally sees the light of day!

“Release early release often” is a known FOSS mantra. This release is kind of late for me personally, but early in the app’s life cycle.

Will anything become of this code? I don’t know. It would be great if someone at least runs it.

Sadly, this is currently the only project I published that is not built for a dead OS.

Just putting it out there… 😛

Security risks or panic mongering?

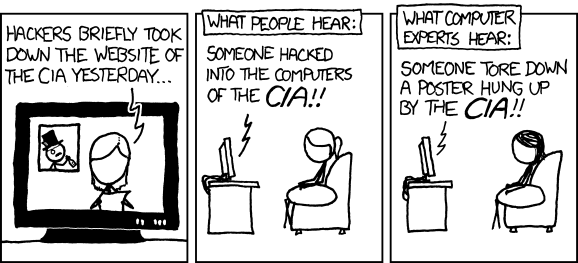

When you read about IT related security threats and breaches in mainstream media, it usually looks like this:

Tech sites and dedicated forums usually do a better job.

Last week, a new article by experts from Kaspersky Lab was doing the rounds on tech sites and forums.

In their blog, the researchers detail how they analyzed 7 popular “connected car” apps for Android phones, that allow opening car doors and some even allow starting the engine. They found 5 types of security flaws in all of them.

Since I am part of a team working on a similar app, a couple of days later this article showed up in my work email, straight from our IT security chief.

This made me think – how bad are these flaws, really?

Unlike most stuff the good folks at Kaspersky find and publish, this time it’s not actual exploits but only potential weaknesses that could lead to discovery of exploits, and personally, I don’t think that some are even weaknesses.

So, here is the list of problems, followed by my personal analysis:

- No protection against application reverse engineering

- No code integrity check

- No rooting detection techniques

- Lack of protection against overlaying techniques

- Storage of logins and passwords in plain text

I am not a security expert, like these guys, just a regular software developer, but I’d like to think I know a thing or two about what makes apps secure.

Let’s start from the bottom:

Number 5 is a real problem and the biggest one on the list. Storing passwords as plain text is about the dumbest and most dangerous thing you can do to compromise the security of your entire service, and doing so on a platform that gives you dedicated secure storage for credentials with no hassle whatsoever for your users is just inexcusable!

It is true that on Android, application data gets some protection via file permissions by default, but this protection is not good enough for sensitive data like passwords.

However, not all of the apps on the list do this. Only two of the 7 store passwords unencrypted, and 4 others store login (presumably username) unencrypted.

Storing only the user name unprotected is not necessarily a security risk. Your email address is the username for your email account, but you give that out to everyone and sometimes publish it in the open.

Same goes for logins for many online services and games that are used as your public screen-name.

Next is number 4: overlay protection.

This one is interesting: as the Kaspersky researchers explain in their article, Android has an API that allows one app to display arbitrary size windows with varying degrees of transparency over other apps.

This ability requires a separate permission, but users often ignore permissions.

This API has legitimate uses for accessibility and convenience. I even used it myself in several apps to give my users quick access from anywhere to some tasks they needed.

Monitoring which app is in foreground is also possible, but you would need to convince the user to set you up as an accessibility service, and that is not a simple task and can not be automated without gaining root access.

So here is the rub: there is a potential for stealing user credentials with this method, but pulling it off in a seamless way most users would not notice is very difficult. And it requires a lot of cooperation from the user: first they must install your malicious app, then they must go into settings, ignore some severe warnings, and set it up a certain way.

I am not a malware writer either, so maybe I am missing something, but it looks to me like there are other, much more convenient exploits out there, and I have yet to see this technique show up in the real world.

So if I had to guess – I’d say it is not a very big concern. Actually, if you got your app set up as an accessibility service, you could steal all text from the device without the overlay trick, and I can’t think of a way to properly detect when a certain app is in use without this and without root.

Now we finally get to the items on the list that aren’t really problems:

Number 3: root detection. A rooted device is not necessarily a compromised device. On the contrary – the only types of root you can possibly detect are the ones the user installed of his own free will, and that means a tech savvy user who knows how to protect his device from malware.

The whole cat and mouse game around root access to phones does more harm to security than letting users have official root access from the manufacturer, but this is a topic for a separate post.

If some app uses a root exploit behind its user’s back, it will only be available to that app, and almost impossible to detect from another app, especially one that is not supposed to be a dedicated anti-malware tool.

Therefore, I see no reason to count this as a security flaw.

Number 2: Code integrity check. This is just overkill for each app to roll out on its own.

Android already has mandatory cryptographic signing in place for all apps that validates the integrity of every file in the APK. In the latest versions of Android, v2 of the signing method was added that also validates the entire archive as a whole (if you didn’t know this, an APK is actually just a zip file).

So what is the point of an app trying to check its code from inside its code?

Since Android already has app isolation and signing on a system level, any malware that gets around this, and whose maker has reversed enough of the targeted app code to modify its binary in useful ways, should have no trouble bypassing any internal code integrity check.

The amount of effort the app developer must invest in this protection, compared with the small amount of effort it would take to break it, just isn’t worth it.

Plus, a bad implementation of such an integrity check could do more harm than good, by introducing bugs and hampering users of legitimate copies of the app, leading to an overall bad user experience.

And finally, the big “winner”, or is it loser?

Number 1 on the list: protection from reverse engineering.

Any decent security expert will tell you that “security by obscurity” does not work!

If all it takes to break your app is to know how it works, consider it broken from the start. The most secure operating systems in the world are based on open source components, and the algorithms for the most secure encryptions are public knowledge.

Reverse engineering apps is also how security experts find the vulnerabilities so the app makers can fix them. It is how the information for the article I am discussing here was gathered!

Attempting to obfuscate the code only leads to difficult debugging, and increased chance of flaws and security holes in the app.

It can be considered an anti-pattern, which is why I am surprised it is featured at the top of the list of security flaws by someone like Kaspersky’s experts.

Lack of reverse engineering protection is the opposite of a security flaw – it is a good thing that can help find real problems!

So there you have it. Two real security issues (maybe even one and a half) out of five, and two out of seven apps actually vulnerable to the biggest one.

So what do you think? Are the connected cars really in trouble, or are the issues found by the experts minor, and the article should have actually been a lot shorter?

Also, one small funny fact: even though the writers tried to hide which apps they tested, it is pretty clear from the blurred icons in the article that one of the apps is from Kia and another one has the Volvo logo.

Since what the researchers found were not actual vulnerabilities that can be exploited right away, but rather bad practices, it would be more useful to publish the identity of the problematic apps so that users could decide if they want to take the risk.

Just putting it out there that “7 leading apps for connected cars are not secure” is likely to cause unnecessary panic among those not tech savvy enough to read through and thoroughly understand the real implications of this discovery.

Beware Java’s half baked generics

Usually I don’t badmouth Java. I think it’s a very good programming language.

In fact, I tend to defend it in arguments on various forums.

Sure, it lacks features compared to some other languages, but then again throwing everything including a kitchen sink into a language is not necessarily a good idea. Just look at how easy it is to get a horrible mess of code in C++ with a single operator doing different things depending on context. Is &some_var trying to get the address of a variable or a reference? And what does &&some_var do? It has nothing to do with the boolean AND operator!

So here we have a simple language friendly to new developers, which is good because there are lots of those using it on the popular Android platform.

Unfortunately, even the best languages have some implementation detail that will make you want to lynch their creators or just rip out your hair, depending on whether you externalize your violent tendencies or not.

Here is a short code example that demonstrates a bug that for about 5 minutes made me think I was high on something:

HashMap<Integer, String> map = new HashMap<>();
byte a = 42;
int b = a;
map.put(b, "The answer!");
if (map.containsKey(a))
    System.out.println("The answer is: " + map.get(a));
else
    System.out.println("What was the question?");

What do you expect this code to print?

Will it even compile?

Apparently it will, but the result will surprise anyone who is not well familiar with Java’s generic types.

Yes folks – the key will not be found and the message What was the question? will be printed.

Here is why:

The generic types in Java are not fully parameterized. Unlike a proper C++ template, some methods of generic containers take parameters of type Object, instead of the type the container instantiation was defined with.

For HashMap, even though its put method is properly parameterized and will raise a compiler error if the wrong type of key is used, the get and containsKey methods take a parameter of type Object and will not even throw a runtime exception if the wrong type is provided. They will simply return null or false respectively, as if the key was simply not there.

The other part of the problem is that primitive types such as byte and int are second class citizens in Java. They are not objects like everything else and can not be used to parameterize generics.

They do have object equivalents named Byte and Integer but those don’t have proper operator overloading so are not convenient for all use cases.

Thus in the code sample above the variable a gets autoboxed to Byte, which as far as Java is concerned is a completely different type that has nothing to do with Integer, and therefore there is no way to search for Byte keys in an Integer map.

A language that implements proper generics would have parameterized these methods so either a compilation error occurred or an implicit cast was made.

In Java, it is up to you as a programmer to keep your key types straight, even between seemingly compatible types like various size integers.
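One way to keep the types straight is to widen the value to int yourself before the lookup, so that it is boxed to Integer rather than Byte. The following is a minimal sketch of that approach (the class name `KeyTypeSketch` and the helper `buildMap` are made up for illustration), and other fixes are possible:

```java
import java.util.HashMap;
import java.util.Map;

public class KeyTypeSketch {
    static Map<Integer, String> buildMap() {
        Map<Integer, String> map = new HashMap<>();
        map.put(42, "The answer!");
        return map;
    }

    public static void main(String[] args) {
        Map<Integer, String> map = buildMap();
        byte a = 42;

        // Autoboxed to Byte: never equal to an Integer key, so the lookup fails.
        System.out.println(map.containsKey(a));

        // Cast to int first, then autoboxed to Integer: the lookup succeeds.
        System.out.println(map.containsKey((int) a));
        System.out.println(map.get((int) a));

        // The underlying reason: equals() between different wrapper types is false.
        System.out.println(Integer.valueOf(42).equals(Byte.valueOf((byte) 42)));
    }
}
```

The explicit cast is easy to forget, so it may be safer to convert once, right where the value enters your code, and use int everywhere after that.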

In my case I was working with a binary protocol received from an external device and the function filling up the map was not the same one reading from it, so it was not straightforward to align types everywhere. But in the end I did it and learned my lesson.

Maybe this long rant will help you too. At least until a version of Java gets this part right…

Android, Busybox and the GNU project

Richard Stallman, the father of the Free Software movement and the GNU project, always insists that people refer to some Linux based operating systems as “GNU/Linux”. This point is so important to him, he will refuse to grant an interview to anyone not willing to use the correct term.

There are people who don’t like this attitude. Some have even tried to “scientifically prove” that GNU project code comprises such a small part of a modern Linux distribution that it does not deserve to be mentioned in the name of such distributions.

Personally, I used to think that the GNU project deserved recognition for its crucial historical role in building freedom respecting operating systems, even if it was only a small part of a modern system.

But a recent experience proved to me that it is not about the amount of code lines or number of packages. And it is not a historical issue. There really is a huge distinction between Linux and GNU/Linux, but to notice it you have to work with a different kind of Linux. One that is not only stripped of GNU components, but of its approach to system design and user interface.

Say hello to Android. Or should I say Android/Linux…

Many people forget, it seems, that Linux is just a kernel. And as such, it is invisible to all users, advanced and novice alike. To interact with it, you need an interface, be it a text based shell or a graphical desktop.

So what happens when someone slaps a completely different user-space with a completely different set of interfaces on top of the Linux kernel?

Here is the story that prompted me to write this half rant half tip post:

My boss wanted to backup his personal data on his Android phone. This sounds like it should be simple enough to do, but the reality is quite the opposite.

In the Android security model, every application is isolated by having its own user (they are created sequentially and have names like app_123).

An application is given its own folder in the device’s data partition where it is supposed to store its data such as configuration, user progress (for games) etc.

No application can access the folder of another application and read its data.

This makes sense from the security perspective, except for one major flaw: no 3rd party backup utility can ever be made. And there is no backup utility provided as part of the system.

Some device makers provide their own backup utilities, and starting with Android 4.0 there is a way to perform a backup through ADB (which is part of Android SDK), but this method is not designed for the average user and has several issues.

There is one way an application on the device can create a proper backup: by gaining root privileges.

But Android is so “secure” it has no mechanism to allow the user to grant such privileges to an application, no matter how much he wants or needs to.

The solution of course, is to change the OS to add the needed capability, but how?

Usually, the owner of a stock Android device would look for a tool that exploits a security flaw in the system to gain root privileges. Some devices can be officially unlocked so a modified version of Android can be installed on them with root access already open.

The phone my boss has is somewhat unusual: it has a version of the OS designed for development and testing, so it has root but the applications on it do not have root.

What this confusing statement means is, that the ADB daemon is running with root privileges on the device allowing you to get a root shell on the phone from the PC and even remount the system partition as writable.

But, there is still no way for an application running on the device to gain root privileges, so when my boss tried to use Titanium Backup, he got a message that his device is not “rooted” and therefore the application will not work.

Like other “root” applications for Android, Titanium Backup needs the su binary to function. But stock Android does not have a su binary. In fact, it does not even have the cp command. That’s right – you can get a shell interface on Android that might look a little bit like the “regular Linux”, but if you want to copy a file you have to use cat.

This is something you will not see on a GNU/Linux OS, not even other Linux based OSs designed for phones such as Maemo or SHR.

Google wanted to avoid any GPL covered code in the user-space (i.e. anywhere they could get away with it), so not only did they not use a “real” shell (such as BASH) they didn’t even use Busybox which is the usual shell replacement in small and embedded systems. Instead, they created their own very limited (or as I call it neutered) version called “Toolbox”.

Fortunately, a lot of work has been done to remedy this, so it is not hard to find a Busybox binary ready made to run on an Android powered ARM based device.

The trick is installing it. Instructions vary slightly from site to site, but I believe the following will work in most cases:

adb remount
adb push busybox /system/bin
adb shell chmod 6755 /system/bin/busybox
adb shell busybox --install /system/bin

Note that your ADB must run as root on the device side!

The important part to notice here is line 3: you must set gid and uid bits on the busybox binary if you want it to function properly as su.

And no – I didn’t write the permissions parameter to chmod as digits to make myself look like a “1337 hax0r”. Android’s version of chmod does not accept letter parameters for permissions.

After doing the steps above I had a working busybox and a proper command shell on the phone, but the backup application still could not get root. When I installed a virtual terminal application on the phone and tried to run su manually I got the weirdest error: unknow user: root

How could this be? ls -l clearly showed files belonging to the ‘root’ user. As a GNU/Linux user I was used to more descriptive and helpful error messages.

I tried running ‘whoami’ from the ADB root shell, and got a similarly cryptic message: unknown uid 0

Clearly there was a root user with the proper UID 0 on the system, but busybox could not recognize it.

Googling showed that I was not the only one encountering this problem, but no solution was in sight. Some advised to reinstall busybox, others suggested playing with permissions.

Finally, something clicked: on a normal GNU/Linux system there is a file called passwd in the /etc folder. This file lists all the users on the system and some information for each user such as their home folder and login shell.

But Android does not use this file, and so it does not exist by default.

Yet another difference.
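To show why a missing passwd file leads to this kind of message, here is a rough Python sketch of a UID to name lookup. It is a simplification written for this post and not Busybox's actual code; the function names and the sample line are invented for illustration.

```python
def name_for_uid(passwd_text, uid):
    """Return the user name for uid, or None when no line matches."""
    for line in passwd_text.splitlines():
        fields = line.split(":")
        # A complete entry has 7 fields; the third one is the numeric UID.
        if len(fields) == 7 and fields[2] == str(uid):
            return fields[0]
    return None

def whoami(passwd_text, uid):
    """Mimic the shape of the error seen on the phone (illustrative only)."""
    name = name_for_uid(passwd_text, uid)
    return name if name is not None else "unknown uid %d" % uid

# No passwd content at all, as on the stock Android system.
print(whoami("", 0))
# A typical desktop-style entry for root.
print(whoami("root:x:0:0:root:/root:/bin/bash\n", 0))
```

The point is that the kernel only knows numeric IDs. The names come from the file, so with no file there is nothing to map UID 0 to.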

So I did the following:

adb shell
# echo 'root::0:0:root:/root:/system/sh' >/etc/passwd

This worked like a charm and finally solved the su problem for the backup application. My boss could finally back up and restore all his data on his own, directly on the phone and without any special trickery.

Some explanation of the “magic” line:

In the passwd file each line represents a single user, and has several ‘fields’ separated by colons (:). You can read in detail about it here.

I copied the line for the root user from my PC, with some slight changes:

The second field is the password field. I left it blank so the su command will not prompt for password.

This is a horrible practice in terms of security, but on Android there is no other choice, since applications attempting to use the su command do not prompt for password.

There are applications called SuperUser and SuperSU that try to ask user permission before granting root privileges, but they require a special version of the su binary which I was unable to install.

The last field is the “login shell” which on Android is /system/sh

The su binary must be able to start a shell for the application to execute its commands.

Note, this is actually a symlink to the /system/mksh binary, and you may want to redirect it to busybox.
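The field layout described above can be sketched in a few lines of Python. The field names follow the usual passwd convention, while PasswdEntry and parse_passwd_line are names I made up for this example.

```python
from collections import namedtuple

# The seven colon-separated fields of a passwd line, in order.
PasswdEntry = namedtuple(
    "PasswdEntry", "name password uid gid gecos home shell"
)

def parse_passwd_line(line):
    """Split one passwd line into named fields."""
    name, password, uid, gid, gecos, home, shell = line.rstrip("\n").split(":")
    return PasswdEntry(name, password, int(uid), int(gid), gecos, home, shell)

entry = parse_passwd_line("root::0:0:root:/root:/system/sh")
print(entry)
# An empty second field is what lets su proceed without a password prompt.
print("password set:", entry.password != "")
print("login shell:", entry.shell)
```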

So this is my story of making one Android/Linux device a little more GNU/Linux device.

It took me a lot of time, trial and error, and of course googling to get this done, and it reminded me again that the saying “Linux is Linux” has its limits and that we should not take the GNU for granted.

It is an important part of the OS I use both at home and at work, not only in terms of components but also in terms of structure and behavior.

And it deserves to be part of the OS classification, if for no other reason than to distinguish the truly different kinds of Linux that are out there.

One of these things is not like the others!

Please look at the following picture:

These are “smart” phones I own.

All of them have different hardware specs, but one is truly different from the others.

Can you tell which one?

It’s the one on the right – i-mate Jamin.

It is also the first “smart” phone that I ever owned.

What makes it different from the others?

The fact that it is the only one in the bunch that does not run on Free Software.

I was inspired to take this picture and put it on my blog by another post (in Hebrew), that talks about black, round corner rectangles and the recent madness surrounding them.

But I am not going to write about that.

There are already plenty of voices shouting about it all over the Internet, and I have nothing constructive to add.

Instead, I will introduce you to my lovely phone collection, which contributed a lot to my hobby and professional programming.

And we will start with the historical sample on the right: i-mate Jamin. (specs)

Back in early 2006, when this device came out, “smartphone” was still a registered trademark of Microsoft, the name they chose for the version of their Windows CE based mobile OS for devices with no touchscreen. (The touchscreen version was then called Windows Mobile Phone Edition)

Such devices were for geeks and hard core businessmen who had to be glued to their office 24/7.

But despite having a proprietary OS, this was a very open device: you could run any program on it (we didn’t call them “apps” then), and you could develop for it without the need to register or pay.

It didn’t matter what country you were from, or how old you were. The complete set of tools was available as a free download from Microsoft’s site.

And the OS allowed you to do a lot of things to it: like its desktop cousin, it completely lacked security. You could even overwrite, or more precisely “overshadow”, OS files that were in ROM with a copy of the same name stored in user-accessible NAND flash (or RAM on older devices).

The system API was almost identical to the Win32 API, which was (and still is) very common on the desktop, so if you knew how to write a program for your Windows powered PC, you knew how to write a program for your phone.

Unlike the systems we are used to today, Windows Mobile had no built in store.

You were on your own when it came to distributing your software, though there were several sites that acted much like the application stores do today: they sold your program for a commission.

But that too meant freedom: no commercial company was dictating morals to the developers or telling them that their program had no right to exist because it “confused users” or simply competed with that company’s own product.

So even though the OS brought with it most of the diseases common to desktop versions of Windows, it gave developers a free range, and thus had a thriving software ecosystem, until MS killed it off in a futile attempt to compete with Apple’s iOS and Google’s Android by taking the worst aspects of both.

The second phone from the right is the Neo 1973.

It was so named because 1973 was the year the first cellular call was made.

I got this device in 2008. By that time, I learned a lot about software freedom, so when I heard about a completely free (as in freedom of speech) phone, I just had to have it.

It wasn’t easy: it could only be bought directly from the company, which meant international shipping and a lot of bureaucracy with the ministry of communication, which required special approval for every imported cellphone.

I was particularly concerned because this was not a commercially available model, despite having FCC certification, so it was possible that I could not get it through customs as a private citizen.

In the end, the problem was solved, though not before customs fees and added UPS charges almost doubled the cost of the device.

It felt great to have it. I never had such complete freedom with a phone before.

But then I realized – I had no idea what to do with this freedom! Developing for the OpenMoko Linux distribution (later, the project switched to SHR) was very different from developing for Windows.

I had a lot to learn, and in the end, I wound up making only one usable program for my two Neo phones: the screen rotate.

One of the things that amazed me about the OpenMoko project was that even though the software and hardware were experimental and at an early stage of development, in many ways they were much better than the commercial Windows Mobile that had been sold for years to many phone makers.

For example, OpenMoko had perfect BiDi support needed for Hebrew and Arabic languages, as well as fonts for those languages shipped with the OS.

This is something MS never did for Windows Mobile, despite having a large R&D center in Israel for almost two decades, and having a large market in other countries that write right-to-left languages.

Also, the Internet browser, though slow, was much more advanced than the one on WM, and even came close to passing the Acid2 test.

The only trouble was, I could never get the microphone working. It didn’t really matter, since I wanted the phone for development and testing, and didn’t intend to carry it around with me for daily use.

Which brings us to the next phone in the collection: the Neo Freerunner.

This was the second device from the OpenMoko project, the more powerful successor to the Neo 1973.

At first, I swore I would not buy it. There just wasn’t enough difference between it and the original. Sure, it had WiFi and a faster processor, but is that really a reason to buy another phone?

But by that time, my trusty old Jamin was getting really old. It had developed some hardware problems, and even with a new battery it would not charge well.

I had a lot of choice in smartphones, working for a company that developed software for them, yet I could not bear the thought of buying yet another non-free phone.

So in the end I broke, and bought the Freerunner, mostly for that nice feeling of carrying a tiny computer in my pocket, made completely with Free Software and Open Hardware.

Thanks to Doron Ofek, who put a lot of effort into advancing the OpenMoko project (and other Free Software projects) in Israel, getting the second device was much easier.

And so it became my primary and only cellphone for the next three years.

I don’t think there are too many people in the world who can honestly say they used an OpenMoko phone as their primary cellphone, with no backup, but I was one of them.

Flashing a brand new OS twice a month or more (if I had time) was just part of the fun.

Sadly, all good things come to an end. The life expectancy of a smartphone is 18 months at best. I was seeing powerful Android based devices all around me, with large screens, fast processors, and, most importantly – 3G data (I spend a lot of time out of WiFi range).

And I wanted a stable device. As much as I hated to admit it, I needed a break from living with a prototype phone and a rapidly changing OS.

But I wasn’t ready to lose my freedom. And I didn’t want to completely surrender my privacy.

Most Android devices need to be hacked just to get root on your own system. And even though the OS is Free Software, most of the “apps”, including built in ones, are proprietary.

And of course, Google is trying to milk every last bit of your personal information it can, and trying to keep them from doing it on Android is very uncomfortable, though definitely possible.

This just won’t do.

Finally, I found a perfect compromise:

My current phone – Nokia N900 (spec).

It was far from being a new device when I finally ordered one through eBay.

It had a major downside compared to any Android device – it had a resistive instead of capacitive touchscreen.

Yet it was the perfect merger, borrowing from all worlds:

It runs mostly on free software, with a real GNU/Linux distribution under the hood, unlike Android which uses a modified Linux kernel, but has little in common with what most people call “Linux”.

It has a proper package manager, offering a decent selection of free software, and updates for all system components including special kernels, but also connected to Nokia’s OVI store.

It even came with a terminal emulator already installed.

Unlike the OpenMoko phones, this was a finished and polished device, with a stable, simple, useful and convenient interface, widgets, and all applications working satisfactorily out of the box.

It even has the flash plugin, which, though a horrible piece of proprietary software on whose grave I will gladly dance, is still needed sometimes to access some sites.

So here I am now, with an outdated, but perfectly usable phone, that can do just about anything from connecting USB peripherals to mounting NFS shares.

It is perfect for me, despite its slightly bulky size and relatively small 3.5 inch screen.

But I know that no phone lasts forever. Some day, the N900 will have to be retired, yet I see no successor on the horizon.

With Microsoft and Apple competing in “who can take away most user rights and get away with it”, and Android devices still containing plenty of locks, restrictions and privacy issues, I don’t know what I will buy when the time comes.

Who knows, maybe with luck and a lot of effort by some very smart people, the GTA04 will blossom into something usable on a daily basis.

Or maybe Intel will get off their collective behinds and put out a phone with whatever Meego/Maemo/Moblin has morphed into.

Even Mozilla is pushing out a Mobile OS of sorts, so who knows…

What do you think?

eval is evil!

Last week a friend of mine got an email pretending to be from LinkedIn.

It looked suspicious so she forwarded it to me for inspection.

A quick look at the HTML attachment showed that it contained some very fishy JavaScript.

One notable part of it was a large array of floating point numbers, positive and negative.

As some of you might have guessed, this array actually represented some more scrambled JavaScript.

Now, I am not a security expert, but I was curious what this thing did. I know there is some tool to test run JavaScript, but I did not remember what it was called, so I just ran Python in interactive mode to make a quick loop and unscramble the floating point array.
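A loop of that kind can be very short. The sketch below is only an illustration: the encoding it assumes (drop the sign, round, and treat the result as a character code) is a guess for demonstration purposes and is not the scheme from the actual email, and the sample numbers are harmless and made up.

```python
def unscramble(numbers):
    """Turn a list of floats back into text.

    Assumed scheme (hypothetical): the rounded absolute value of each
    number is the character code of one character of the hidden script.
    """
    return "".join(chr(round(abs(n))) for n in numbers)

# Made-up sample data, not taken from the suspicious attachment.
sample = [104.2, -105.0, 33.4]
print(unscramble(sample))
```

Doing this in Python instead of a browser means the decoded text is only ever printed as a string and never executed.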

What I found was JavaScript redirecting the browser to a very suspicious looking domain.

Downloading the content of the URL resulted in more JavaScript, this time with a very long string (over 54000 bytes long!).