
Security risks or panic mongering?

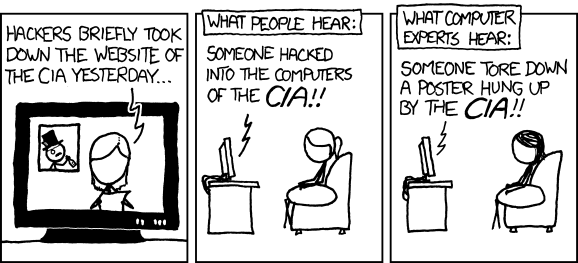

When you read about IT related security threats and breaches in mainstream media, it usually looks like this:

Tech sites and dedicated forums usually do a better job.

Last week, a new article by experts from Kaspersky Lab was doing the rounds on tech sites and forums.

In their blog, the researchers detail how they analyzed 7 popular “connected car” apps for Android phones that allow opening car doors and, in some cases, even starting the engine. They found 5 types of security flaws across them.

Since I am part of a team working on a similar app, a couple of days later this article showed up in my work email, straight from our IT security chief.

This made me think – how bad are these flaws, really?

Unlike most of what the good folks at Kaspersky find and publish, this time it’s not actual exploits but only potential weaknesses that could lead to the discovery of exploits, and personally, I don’t think some of them are even weaknesses.

So, here is the list of problems, followed by my personal analysis:

- No protection against application reverse engineering

- No code integrity check

- No rooting detection techniques

- Lack of protection against overlaying techniques

- Storage of logins and passwords in plain text

I am not a security expert, like these guys, just a regular software developer, but I’d like to think I know a thing or two about what makes apps secure.

Let’s start from the bottom:

Number 5 is a real problem and the biggest one on the list. Storing passwords as plain text is about the dumbest and most dangerous thing you can do to compromise the security of your entire service, and doing so on a platform that gives you dedicated secure storage for credentials, with no hassle whatsoever for your users, is just inexcusable!

It is true that on Android, application data gets some protection via file permissions by default, but this protection is not good enough for sensitive data like passwords.

However, not all of the apps on the list do this. Only two of the 7 store passwords unencrypted, and 4 others store the login (presumably the username) unencrypted.

Storing only the username unprotected is not necessarily a security risk. Your email address is the username for your email account, but you give that out to everyone and sometimes publish it in the open.

The same goes for logins for many online services and games, where the login doubles as your public screen name.
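To make the point about number 5 concrete, here is a minimal sketch of what “not plain text” can look like: the password is encrypted with AES-GCM before it is written anywhere. This is plain Java, not any of the tested apps’ code. `CredentialVault` and its method names are made up for illustration, and the in-memory key is only a stand-in; on a real device I would expect the key to come from the platform’s keystore.

```java
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Arrays;

/** Hypothetical helper: encrypts a credential before it is written to app storage. */
final class CredentialVault {
    private static final int IV_BYTES = 12;   // commonly recommended nonce size for GCM
    private static final int TAG_BITS = 128;  // authentication tag length

    private final SecretKey key;
    private final SecureRandom random = new SecureRandom();

    CredentialVault(SecretKey key) {
        this.key = key;
    }

    /** Assumption: an in-memory key standing in for a platform keystore key. */
    static SecretKey newInMemoryKey() {
        try {
            KeyGenerator generator = KeyGenerator.getInstance("AES");
            generator.init(256);
            return generator.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("AES is not available", e);
        }
    }

    /** Returns IV followed by ciphertext and tag; this blob is what gets stored. */
    byte[] seal(String secret) {
        try {
            byte[] iv = new byte[IV_BYTES];
            random.nextBytes(iv); // a fresh nonce for every encryption
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
            byte[] ciphertext = cipher.doFinal(secret.getBytes(StandardCharsets.UTF_8));
            byte[] blob = Arrays.copyOf(iv, iv.length + ciphertext.length);
            System.arraycopy(ciphertext, 0, blob, iv.length, ciphertext.length);
            return blob;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Encryption failed", e);
        }
    }

    /** Reverses seal(); fails if the blob was modified or the key is wrong. */
    String open(byte[] blob) {
        try {
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.DECRYPT_MODE, key,
                    new GCMParameterSpec(TAG_BITS, blob, 0, IV_BYTES));
            byte[] plain = cipher.doFinal(blob, IV_BYTES, blob.length - IV_BYTES);
            return new String(plain, StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Decryption failed", e);
        }
    }

    public static void main(String[] args) {
        CredentialVault vault = new CredentialVault(newInMemoryKey());
        byte[] stored = vault.seal("correct horse battery staple");
        System.out.println("stored bytes: " + stored.length);
        System.out.println("recovered: " + vault.open(stored));
    }
}
```

The stored blob is useless without the key, which is the whole point: file permissions alone no longer decide who can read the password.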

Next is number 4: overlay protection.

This one is interesting: as the Kaspersky researchers explain in their article, Android has an API that allows one app to display windows of arbitrary size, with varying degrees of transparency, over other apps.

This ability requires a separate permission, but users often ignore permissions.

This API has legitimate uses for accessibility and convenience. I have even used it myself in several apps to give my users quick access, from anywhere, to tasks they needed.

Monitoring which app is in the foreground is also possible, but you would need to convince the user to set you up as an accessibility service, and that is not a simple task and cannot be automated without gaining root access.

So here is the rub: there is potential for stealing user credentials with this method, but pulling it off seamlessly, so that most users would not notice, is very difficult. It also requires a lot of cooperation from the user: first they must install your malicious app, then they must go into settings, ignore some severe warnings, and set it up a certain way.

I am not a malware writer either, so maybe I am missing something, but it looks to me like there are other, much more convenient exploits out there, and I have yet to see this technique show up in the real world.

So if I had to guess, I’d say it is not a very big concern. Actually, if you got your app set up as an accessibility service, you could steal all the text from the device without the overlay trick, and I can’t think of a way to properly detect when a certain app is in use without this and without root.

Now we finally get to the items on the list that aren’t really problems:

Number 3: root detection. A rooted device is not necessarily a compromised device. On the contrary: the only types of root you can possibly detect are the ones the user installed of his own free will, and that means a tech-savvy user who knows how to protect his device from malware.

The whole cat and mouse game around root access to phones does more harm to security than letting users have official root access from the manufacturer, but this is a topic for a separate post.

If some app uses a root exploit behind its user’s back, the root access will only be available to that app, and it will be almost impossible to detect from another app, especially one that is not supposed to be a dedicated anti-malware tool.

Therefore, I see no reason to count this as a security flaw.
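To show how shallow a typical root check is, here is a rough sketch of the kind of test I have in mind: look for an `su` binary in a few well-known places. The class name and the path list are my own assumptions for illustration, not something taken from the Kaspersky article or from any specific app.

```java
import java.io.File;
import java.util.Arrays;
import java.util.List;

/** Hypothetical example of a naive "is this device rooted?" check. */
final class NaiveRootCheck {
    // Assumed, incomplete list of places where a user-installed `su` tends to live.
    static final List<String> DEFAULT_SU_PATHS = Arrays.asList(
            "/system/bin/su", "/system/xbin/su", "/sbin/su", "/su/bin/su");

    /** Returns true if any of the candidate paths exists on the file system. */
    static boolean looksRooted(List<String> candidatePaths) {
        for (String path : candidatePaths) {
            if (new File(path).exists()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("su binary found: " + looksRooted(DEFAULT_SU_PATHS));
    }
}
```

A check like this only catches root that was installed openly, in the expected location. Anything that renames or hides the binary, or that never installs one at all, passes straight through.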

Number 2: Code integrity check. This is just overkill for each app to roll out on its own.

Android already has mandatory cryptographic signing in place for all apps, which validates the integrity of every file in the APK. In the latest versions of Android, v2 of the signing scheme was added, which also validates the archive as a whole (in case you didn’t know, an APK is actually just a zip file).
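Since an APK is just a zip file, the per-file part of this is easy to picture. The sketch below is plain Java that builds a tiny zip in memory and computes a SHA-256 digest for every entry, which, as far as I understand it, is roughly the information the original JAR-style signing records in the manifest before signing it. It is not Android’s actual verifier; `ArchiveDigests` and the file names are invented for the example.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

/** Hypothetical illustration of per-file digests over a zip archive. */
final class ArchiveDigests {
    /** Builds a small in-memory zip; a stand-in for a real APK. */
    static byte[] zipOf(Map<String, String> files) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ZipOutputStream zip = new ZipOutputStream(bytes)) {
                for (Map.Entry<String, String> file : files.entrySet()) {
                    zip.putNextEntry(new ZipEntry(file.getKey()));
                    zip.write(file.getValue().getBytes(StandardCharsets.UTF_8));
                    zip.closeEntry();
                }
            }
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Maps each entry name to the Base64 SHA-256 digest of its uncompressed content. */
    static Map<String, String> digestEntries(byte[] archive) {
        Map<String, String> digests = new LinkedHashMap<>();
        try (ZipInputStream zip = new ZipInputStream(new ByteArrayInputStream(archive))) {
            byte[] buffer = new byte[8192];
            for (ZipEntry entry = zip.getNextEntry(); entry != null; entry = zip.getNextEntry()) {
                MessageDigest sha256 = MessageDigest.getInstance("SHA-256");
                for (int n = zip.read(buffer); n != -1; n = zip.read(buffer)) {
                    sha256.update(buffer, 0, n);
                }
                digests.put(entry.getName(), Base64.getEncoder().encodeToString(sha256.digest()));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available", e);
        }
        return digests;
    }

    public static void main(String[] args) {
        Map<String, String> files = new LinkedHashMap<>();
        files.put("classes.dex", "pretend this is compiled code");
        files.put("res/strings.txt", "pretend this is a resource");
        digestEntries(zipOf(files)).forEach((name, digest) ->
                System.out.println(name + " -> " + digest));
    }
}
```

Change a single byte in any file and its digest no longer matches what was signed, so the system refuses the package before the app’s own code ever gets a chance to run.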

So what is the point of an app trying to check its code from inside its code?

Since Android already has app isolation and signing on a system level, any malware that gets around this, and whose maker has reversed enough of the targeted app code to modify its binary in useful ways, should have no trouble bypassing any internal code integrity check.

Given the amount of effort the app developer would have to invest in this protection, versus the small amount of effort it would take to break it, it just isn’t worth it.

Plus, a bad implementation of such an integrity check could do more harm than good, by introducing bugs and hampering users of legitimate copies of the app, leading to an overall bad user experience.

And finally, the big “winner”, or is it loser?

Number 1 on the list: protection from reverse engineering.

Any decent security expert will tell you that “security by obscurity” does not work!

If all it takes to break your app is to know how it works, consider it broken from the start. The most secure operating systems in the world are based on open source components, and the algorithms behind the most secure encryption schemes are public knowledge.

Reverse engineering apps is also how security experts find the vulnerabilities so the app makers can fix them. It is how the information for the article I am discussing here was gathered!

Attempting to obfuscate the code only leads to difficult debugging and an increased chance of flaws and security holes in the app.

It can be considered an anti-pattern, which is why I am surprised it is featured at the top of the list of security flaws by someone like Kaspersky’s experts.

Lack of reverse engineering protection is the opposite of a security flaw: it is a good thing that can help find real problems!

So there you have it. Two real security issues (maybe even one and a half) out of five, and two out of seven apps actually vulnerable to the biggest one.

So what do you think? Are connected cars really in trouble, or are the issues found by the experts minor, and should the article actually have been a lot shorter?

Also, one small funny fact: even though the writers tried to hide which apps they tested, it is pretty clear from the blurred icons in the article that one of the apps is from Kia and another one has the Volvo logo.

Since what the researchers found were not actual vulnerabilities that can be exploited right away, but rather bad practices, it would be more useful to publish the identity of the problematic apps so that users could decide if they want to take the risk.

Just putting it out there that “7 leading apps for connected cars are not secure” is likely to cause unnecessary panic among those not tech savvy enough to read through and thoroughly understand the real implications of this discovery.
